National Survey for Wales: quality report 2024 to 2025

How the survey adheres to the European Statistical System definition of quality, with a summary of the methods used to compile the output.

Introduction and survey methodology

The National Survey for Wales is a large-scale, random-sample telephone survey covering adults aged 16+ across Wales. The survey results are used to inform and monitor Welsh Government policies. They are also used by other public sector organisations such as local councils and NHS Wales, voluntary organisations, academics, the media, and members of the public.

Addresses are selected at random, and invitations are sent by post requesting that a phone number be provided for the address. A phone number can be provided via an online portal or a telephone enquiry line. Where no phone number is provided, telematching is undertaken with available databases of phone numbers to see if one can be found for the address.

The interviewer then calls the phone number for the address, establishes how many adults live there, and selects one at random (the person with the next birthday) to take part in the survey. The selected person is interviewed by phone. Once they have completed the phone section, they are asked to complete an online section and details are sent to them.

If no number is obtained for the address then the interviewer makes a visit to the address to select a respondent and either carries out an in-home, face-to-face interview with them or (if a phone interview is preferred) collects a phone number for them.

The survey lasts around 40 to 45 minutes on average and covers a range of topics. Respondents are offered a £15 voucher to say thank you for taking part. In 2024-25 the achieved sample size each month was around 500 people on average, and the response rate was 18.7% of those eligible to take part.

Summary of quality

Relevance

The degree to which the statistical product meets user needs for both coverage and content.

What it measures

The survey covers a broad range of topics such as education, exercise, health, use of the internet, community cohesion, wellbeing, employment, and finances. Topics were updated for the start of 2024-25 in order to meet the latest needs for information.

A range of demographic questions is also included, to allow for detailed cross-analysis of the results.

The survey content and materials are available from the National Survey web pages.

Mode

30 minute telephone interview plus 15 minute online section, or 30 minute face-to-face interview plus 10 minute self-completion section.

Frequency

The 2024-25 results, published in August 2025, are based on interviews carried out between March 2024 and March 2025.

Sample size

An achieved sample of around 500 respondents a month on average. See the 2024-25 technical report for details of the issued and achieved sample.

Periods available

The National Survey has been carried out in most years since 2012 to 2013, with some breaks in data collection to switch survey contractor.

Sample frame

Addresses were sampled randomly from Royal Mail’s small user Postcode Address File, a list of all UK private addresses (excluding institutional accommodation). The address sample was drawn by the Office for National Statistics to ensure that respondents have not recently been selected for a range of other large-scale government surveys, including previous years of the National Survey. Only people aged 16+ were eligible to take part. Proxy interviews were not allowed.

Sample design

The sample of addresses is stratified by local authority. The sample size in each local authority is broadly proportionate to local authority population size, but is designed to yield an achieved sample of at least 250 in the smallest authorities and at least 600 in Powys.
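As a rough illustration of this kind of allocation, the Python sketch below allocates interviews in proportion to population and then raises any stratum that falls below its minimum. The function name, the populations, and the total are hypothetical. Only the minimums of 250 and 600 come from the text, and the actual allocation procedure used for the survey is not described here.

```python
def allocate_sample(populations, total, default_min, special_mins=None):
    """Allocate `total` interviews across strata in proportion to population,
    then raise any stratum below its minimum up to that minimum.

    Assumption of this sketch: raising strata to their minimum means the
    allocations can sum to more than `total`; a real design would rebalance.
    """
    special_mins = special_mins or {}
    pop_total = sum(populations.values())
    allocation = {}
    for name, pop in populations.items():
        proportional = round(total * pop / pop_total)
        minimum = special_mins.get(name, default_min)
        allocation[name] = max(proportional, minimum)
    return allocation


# Hypothetical populations, for illustration only.
example = allocate_sample(
    {"Small authority": 60_000, "Powys": 140_000, "Large authority": 300_000},
    total=2_000,
    default_min=250,
    special_mins={"Powys": 600},
)
print(example)
```

In this made-up example the two smaller strata are lifted to their minimums, while the largest stratum keeps its proportionate share.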

In cases where there was more than one household found at an address, the household either self-selected by responding to the letter or (if an interviewer visited) one household was chosen using a random selection procedure. In each household, the respondent was then randomly selected from all adults (aged 16 or over) in the household who regard the sample address as their main residence, regardless of how long they have lived there. Random selection within the household was undertaken using the ‘next birthday’ method. That is, the person who the interviewer first spoke to was asked which adult in the household has the next birthday; that person was then selected to take part. The ‘next birthday’ method is a long-established method for achieving an acceptably random sample in telephone surveys, while minimising the amount of potentially sensitive information about the household that has to be obtained at first contact.
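The 'next birthday' rule described above can be sketched in a few lines of Python. This is an illustration only: the function names and the example household are hypothetical, and treating a birthday that falls on the contact date as the 'next' one is an assumption of the sketch rather than a documented survey rule.

```python
from datetime import date


def days_until_next_birthday(birthday: date, today: date) -> int:
    """Days from `today` to the next occurrence of `birthday` (0 if it is today)."""

    def occurrence(year: int) -> date:
        try:
            return birthday.replace(year=year)
        except ValueError:
            # 29 February birthday in a non-leap year: use 1 March (assumption).
            return date(year, 3, 1)

    upcoming = occurrence(today.year)
    if upcoming < today:
        upcoming = occurrence(today.year + 1)
    return (upcoming - today).days


def select_next_birthday(adults, today):
    """adults: list of (name, birthday) pairs for eligible adults aged 16 or over."""
    return min(adults, key=lambda a: days_until_next_birthday(a[1], today))[0]


# Hypothetical household contacted on 1 July 2024.
household = [
    ("Adult A", date(1980, 1, 15)),
    ("Adult B", date(1990, 6, 20)),
    ("Adult C", date(2000, 11, 2)),
]
selected = select_next_birthday(household, today=date(2024, 7, 1))
print(selected)
```

Because birthdays are effectively unrelated to survey topics, picking the adult whose birthday comes next approximates a random draw without listing every household member's details.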

Weighting

Results were weighted to take account of unequal selection probabilities and for differential non-response, i.e. to ensure that the age and sex distribution of the responding sample matches that of each local authority. Additionally, the weighting was designed to compensate for the uneven achievement of interviews by quarter.
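To show the general idea of weighting a sample to match a population distribution, here is a minimal post-stratification sketch in Python. It is a simplification: the cell labels and counts are invented, the function name is hypothetical, and the survey's actual weighting procedure (which also covers selection probabilities and quarterly achievement) is set out in the technical report rather than here.

```python
from collections import Counter


def poststratification_weights(sample_cells, population_counts):
    """Return one weight per cell so the weighted sample shares match the population.

    weight(cell) = population share of the cell / sample share of the cell
    """
    n = len(sample_cells)
    population_total = sum(population_counts.values())
    sample_counts = Counter(sample_cells)
    return {
        cell: (population_counts[cell] / population_total) / (count / n)
        for cell, count in sample_counts.items()
    }


# Invented example: younger adults are under-represented in the sample.
sample_cells = ["16-44"] * 2 + ["45+"] * 6
population_counts = {"16-44": 500, "45+": 500}
weights = poststratification_weights(sample_cells, population_counts)
print(weights)
```

Under-represented groups receive weights above 1 and over-represented groups receive weights below 1, so the weights sum to the original sample size.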

Imputation

No imputation.

Outliers

No filtering of outliers.

Primary purpose

The main purpose of the survey is to provide information on the views and behaviours of people across Wales, covering a wide range of topics relating to them and their local area.

The results help public sector organisations to:

- make decisions that are based on sound evidence

- monitor changes over time

- identify areas of good practice that can be implemented more widely

- identify areas or groups that would benefit from intensive local support, so action can be targeted as effectively as possible

Users and uses

The survey is commissioned and used to help with policy decisions by the Welsh Government, Sport Wales, Natural Resources Wales, and Arts Council of Wales. As well as these organisations, there is a wide range of other users of the survey including: local authorities across Wales, NHS Wales, and Public Health Wales; other UK government departments and local government organisations; other public sector organisations; academics; the media; members of the public; and the voluntary sector, particularly organisations based in Wales.

The latest data is deposited each autumn at the UK Data Archive, to ensure that the results are widely accessible for research purposes. Results are also linked with other datasets via secure research environments, for example the Secure Anonymised Information Linkage databank (SAIL) at Swansea University. Respondents are able to opt out of having their results linked if they wish.

Strengths and limitations

Strengths of the 2024-25 National Survey

- A randomly-selected sample.

- Coverage of a wide range of topics, allowing cross-analyses between topics to be undertaken. A range of demographic questions were also included to allow cross-analysis by age, sex, employment status, etc.

- Where possible, questions were selected that have been used in previous years of the National Survey and in other major surveys. This means that they are tried and tested, and that some results can be compared over time and with other countries. Where necessary, questions from other sources were adapted (typically shortened) to ensure that they work well by telephone or online.

- Questions were developed by survey experts, peer-reviewed by the survey contractor, and cognitively tested with members of the public before fieldwork began.

- The interviewer-led section of the survey (and where necessary the self-completion section) was carried out by telephone for most respondents, allowing people to take part who do not use the internet or who have lower levels of literacy. The telephone mode appears to be as accessible as face-to-face mode for people with hearing impairments, given that the proportion of respondents reporting hearing impairments did not fall when in 2020 the primary mode switched to telephone from face-to-face.

- For a proportion of addresses (just under half) which did not provide a phone number, an interviewer visited the address to make contact and offer an in-home face-to-face interview, or a telephone interview if preferred. Where the interview was face-to-face, the follow-up section took place as a self-completion on the interviewer’s tablet computer. This mixture of modes allowed some choice for respondents over how they participate and meant that a higher response rate was achieved than if only telephone plus online completion had been offered, while reducing survey costs compared with a fully face-to-face design.

- Having an interviewer administering the survey helps to ensure that all relevant questions are answered. It also allows interviewers to read out introductions to questions and to help ensure respondents understand what is being asked (but without deviating from the question wording), so that respondents can give accurate answers.

- For respondents who took part by telephone, the second of the two survey sections was offered online (but if necessary the interview could continue by phone, e.g. if the respondent doesn’t use the internet).

- The self-completion section of the survey works well for more sensitive topics or ones where the involvement of an interviewer could affect responses. It also works well for presenting longer lists of information, and where comparability with other online surveys is wanted.

- The survey is weighted to adjust for non-response, which helps to make the results more representative.

- The results were published relatively quickly after the end of fieldwork, within around five months. Large numbers of results tables are available in an interactive viewer. See National Survey for Wales for publications.

- Use can be made of linked records (that is, survey responses can be analysed in the context of other administrative and survey data that is held about the relevant respondents).

Limitations of the survey

- The achieved sample size for 2024-25 (6,000 respondents) is smaller than originally planned, and so for this year it will not be possible to produce breakdowns by local authority and for smaller subgroups.

- A substantial proportion of the individuals sampled did not take part, as is generally the case with social surveys of this design. The response rate for 2024-25 was lower than in previous years: 18.7% of those eligible to take part did so, and therefore a little over 80% of those eligible did not take part. It is possible that this may affect the accuracy of the estimates produced, although it is worth noting that the level of change in estimates compared with 2022-23 is no higher than would typically be expected between survey years. For a more general discussion on how to interpret social survey response rates, and their relationship to non-response bias, see the Survey Futures network position statement on response rates.

- Differences in the composition of the achieved sample compared with the general population and with previous years of the survey. Compared with the 2022-23 unweighted achieved sample, the 2024-25 sample has a number of differences such as more Welsh speakers, fewer people aged 25-54, more people aged 75+, more people with higher-level qualifications, fewer people with no qualifications, fewer working people, and more people in rural areas. In terms of household type, there were more single-person households in the sample for 2024-25, and fewer couples (with or without children). There were fewer people in the areas of lowest income and employment, and more in areas where people have higher levels of qualification and skills. There was also a less even spread of interviews across the year (for example, proportionately fewer in February and March) compared with previous years. Weights have been produced to bring the sample closer to the wider population in terms of age, sex, and local authority size, as well as to compensate for variation from target in the numbers of interviews in each health board within each quarter. The weighting reduces but will not eliminate the effects of differences in the achieved sample compared with previous years and with the population.

- Differences between the 2024-25 results and previous years could represent real changes or could be due to changes in how the survey was carried out compared with previous years; or both. Work is underway to investigate any differences in results and further, topic-specific reports will be published in due course. While this work continues, we recommend care when making comparisons between 2024-25 results and those from previous years. Where possible, comparisons (particularly apparent changes over time) should be considered in the context of results from other data sources.

- There are also potential mode effects (respondents answering differently over the telephone compared with how they would answer face-to-face) which may have led to differences in the 2024-25 results compared with results obtained under previous survey designs. The 2024-25 technical report, published September 2025, provides more detail on the issues encountered.

It is appropriate to use the statistics published from the 2024-25 survey. At a national level, the survey estimates continue to provide a reasonable indication of the true figures for Wales, particularly when used alongside alternative sources. However, reflecting the issues discussed above, the 2024-25 first release and all other outputs based on the 2024-25 data are being published as official statistics in development, with temporary suspension of accredited official statistics. From 2026-27 the survey will switch to an online-first design, and it is planned to request that accredited official statistics be reinstated for 2026-27 onwards.

Smaller limitations that are common to all survey years

- The survey does not cover people living in communal establishments (e.g. care homes, residential youth offender homes, hostels, and student halls).

- Although care has been taken to make the questions as accessible as possible, there will still be instances where respondents do not respond accurately, for example because they have not understood the question correctly or for some reason they are not able or do not wish to provide an accurate answer. Again, this will affect the accuracy of the estimates produced.

- A proportion of respondents (around 6%) who complete the first section by telephone choose not to complete the second, online section.

Several of the strengths and limitations mentioned in this section are connected to the accuracy of the results. Accuracy is discussed in more detail in the following section.

Accuracy

The closeness between an estimated result and the (unknown) true value.

The main threats to accuracy are sources of error, including sampling error and non-sampling error.

Sampling error

Sampling error arises because the estimates are based on a random sample of the population rather than the whole population. The results obtained for any single random sample are likely to vary by chance from the results that would be obtained if the whole population was surveyed (i.e. a census), and this variation is known as the sampling error. In general, the smaller the sample size the larger the potential sampling error.

For a random sample, sampling error can be estimated statistically based on the data collected, using the standard error for each variable. Standard errors are affected by the survey design, and can be used to calculate confidence intervals in order to give a more intuitive idea of the size of sampling error for a particular variable. These issues are discussed in the following subsections.

Effect of survey design on standard errors

The survey was stratified at local authority level, with different probabilities of selection for people living in different local authorities. Weighting was used to correct for these different selection probabilities, to ensure the results reflect the population characteristics (age and sex) of each local authority, and to correct for unevenness in achievement over time.

One of the effects of this complex design and of applying survey weights is that standard errors for the survey estimates are generally higher than the standard errors that would be derived from a simple random sample of the same size [footnote 1].

The ratio of the standard error of a complex sample to the standard error of a simple random sample (SRS) of the same size is known as the design factor, or “deft”. If the standard error of an estimate in a complex survey is calculated as though it has come from an SRS survey, then multiplying that standard error by the deft gives the true standard error of the estimate which takes into account the complex design.

The ratio of the sampling variance of the complex sample to that of a simple random sample of the same size is the design effect, or “deff” (which is equal to the deft squared). Dividing the actual sample size of a complex survey by the deff gives the “effective sample size”. This is the size of an SRS that would have given the same level of precision as did the complex survey design.
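The relationships between deft, deff, and effective sample size can be checked with a short Python calculation. The deft of 1.2 below is a made-up value for illustration, not a figure from the survey, and the function names are hypothetical.

```python
def design_summary(n, deft):
    """Return the design effect and effective sample size for a given deft."""
    deff = deft ** 2  # design effect is the design factor squared
    return {"deft": deft, "deff": deff, "effective_n": n / deff}


def complex_standard_error(srs_standard_error, deft):
    """Scale a simple-random-sample standard error up by the design factor."""
    return srs_standard_error * deft


# Illustrative values only: 6,000 respondents and an assumed deft of 1.2.
summary = design_summary(n=6_000, deft=1.2)
print(summary)
print(complex_standard_error(0.010, deft=1.2))
```

With these assumed inputs the deff is 1.44, so the 6,000 interviews carry roughly the precision of a simple random sample of about 4,167.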

All cross-analyses produced by the National Survey team, for example in bulletins and in the tables and charts available in our results viewer, take account of the design effect for each variable.

Confidence intervals (‘margin of error’)

Because the National Survey is based on a random sample, standard errors can be used to calculate confidence intervals, sometimes known as the ‘margin of error’, for each survey estimate. The confidence intervals for each estimate give a range within which the ‘true’ value for the population is likely to fall (that is, the figure we would get if the survey covered the entire population).

The most commonly-used confidence interval is a 95% confidence interval. If we carried out the survey repeatedly with 100 different samples of people and for each sample produced an estimate of the same particular population characteristic (e.g. satisfaction with life) with 95% confidence intervals around it, the exact estimates and confidence intervals would all vary slightly for the different samples. But we would expect the confidence intervals for about 95 of the 100 samples to contain the true population figure.
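The repeated-sampling interpretation can be illustrated with a small simulation in Python. This sketch assumes simple random sampling of a yes/no characteristic with a known true proportion, which is a simplification of the survey's design. All parameter values and the function name are hypothetical.

```python
import math
import random


def simulate_coverage(true_p=0.3, n=500, repeats=1000, z=1.96, seed=1):
    """Share of simulated samples whose 95% interval contains the true proportion."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    covered = 0
    for _ in range(repeats):
        # Draw one simple random sample of size n.
        successes = sum(rng.random() < true_p for _ in range(n))
        p_hat = successes / n
        se = math.sqrt(p_hat * (1 - p_hat) / n)
        if p_hat - z * se <= true_p <= p_hat + z * se:
            covered += 1
    return covered / repeats


coverage = simulate_coverage()
print(f"Share of intervals containing the true value: {coverage:.3f}")
```

The share printed should be close to 0.95, although it will not be exactly 0.95 in any single run.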

The larger the confidence interval, the less precise an estimate is.

95% confidence intervals have been calculated for a range of National Survey variables and are included in the technical report for 2024-25. These intervals have been adjusted to take into account the design of the survey, and are larger than they would be if the survey had been based on a simple random sample of the same size. They equal the point estimate plus or minus approximately 1.96 * the standard error of the estimate [footnote 2]. Confidence intervals are also included in all the charts and tables of results available in our Results viewer.
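As a worked example of the calculation just described, the Python sketch below computes a 95% confidence interval for a proportion, with and without a design factor. The estimate of 40%, the sample size of 6,000, and the deft of 1.2 are illustrative assumptions, and the function name is hypothetical.

```python
import math


def confidence_interval(p, n, deft=1.0, z=1.96):
    """Approximate confidence interval for a proportion.

    The simple-random-sample standard error is multiplied by the design
    factor (deft) to allow for the complex survey design.
    """
    standard_error = deft * math.sqrt(p * (1 - p) / n)
    margin = z * standard_error
    return p - margin, p + margin


# Illustrative inputs only.
srs_interval = confidence_interval(0.40, 6_000)
design_interval = confidence_interval(0.40, 6_000, deft=1.2)
print(srs_interval)
print(design_interval)
```

The design-adjusted interval is wider than the simple-random-sample interval by a factor equal to the deft.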

Confidence intervals can also be used to help tell whether there is a real difference between two groups (one that is not just due to sampling error, i.e. the particular characteristics of the people who happened to take part in the survey). As a rough guide to interpretation: when comparing two groups, if the confidence intervals around the estimates overlap then it can be assumed that there is no statistically significant difference between the estimates. This approach is not as rigorous as doing a formal statistical test, but is straightforward, widely used and reasonably robust.

Note that compared with a formal test, checking to see whether two confidence intervals overlap is more likely to lead to “false negatives”: incorrect conclusions that there is no real difference, when in fact there is a difference. It is also less likely than a formal test to lead to “false positives”: incorrect conclusions that there is a difference when there is in fact none. However, carrying out many comparisons increases the chance of finding false positives. So when many comparisons are made, for example when producing large numbers of tables of results containing confidence intervals, the conservative nature of the test is an advantage because it reduces (but does not eliminate) the chance of finding false positives.
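The conservative nature of the overlap check can be seen in a small Python comparison. The formal test used here is a two-sample z-test that assumes the two groups are independent, which is one common choice and not necessarily the test the National Survey team uses. The estimates and standard errors are invented, and the function names are hypothetical.

```python
import math


def interval(estimate, standard_error, z=1.96):
    """95% confidence interval around an estimate."""
    return estimate - z * standard_error, estimate + z * standard_error


def intervals_overlap(ci_a, ci_b):
    """True if the two intervals share at least one point."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]


def z_test_differs(est_a, se_a, est_b, se_b, z=1.96):
    """Two-sample z-test at the 5% level, assuming independent groups."""
    return abs(est_a - est_b) > z * math.sqrt(se_a ** 2 + se_b ** 2)


# Invented estimates: 50.0% and 53.5%, each with a standard error of 1 point.
overlap = intervals_overlap(interval(0.500, 0.01), interval(0.535, 0.01))
formal = z_test_differs(0.500, 0.01, 0.535, 0.01)
print(overlap, formal)
```

In this example the intervals overlap, so the rough guide reports no significant difference, while the formal test does find one. This is the 'false negative' case described above.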

Non-sampling error

“Non-sampling error” means all differences between the survey estimates and true population values except the differences due to sampling error. Unlike sampling error, non-sampling error is present in censuses as well as sample surveys. Types of non-sampling error include: coverage error, non-response error, measurement error and processing error.

It is not possible to eliminate non-sampling error altogether, and it is not possible to give statistical estimates of the size of non-sampling error. Substantial efforts have been made to reduce non-sampling error in the National Survey. Some of the key steps taken are discussed in the following subsections.

Measurement error: question development

To reduce measurement error, harmonised or well-established questions are used in the survey where possible. New questions are developed by survey experts and many have been subject to external peer review, with a subset also being cognitively tested with members of the public. Reports on question review and testing are available on the National Survey webpages.

Non-response

Non-response (i.e. individuals who are selected but do not take part in the survey) is a key component of non-sampling error. Response rates are monitored closely.

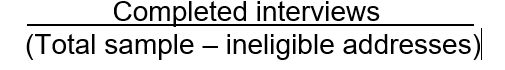

The response rate is the proportion of eligible addresses that yielded an interview, and is defined here as

As noted earlier the survey results are weighted to take account of differential non-response across age and sex population subgroups, i.e. to ensure that the age and sex distribution of the responding sample matches that of the population of Wales. This step is designed to reduce the non-sampling error due to differential non-response by age and sex. For 2024-25, the weights also adjusted for unevenness of achievement over the year.

Missing answers

Missing answers occur for several reasons including refusal or inability to answer a particular question, and cases where the question is not applicable to the respondent. The level of missing values was minimised by not actively presenting refusal and Don’t Know codes to respondents in the interviewer-led section (although these are generally presented to respondents in the self-completion section, for ethical reasons). Missing answers are usually omitted from tables and analyses, except where they are of particular interest (e.g. a high level of “Don’t know” responses may be of substantive interest).

Measurement error: interview quality checks

Another potential cause of bias is interviewers systematically influencing responses in some way. It is likely that responses will be subject to effects such as social desirability bias (where the answer given is affected by what the respondent perceives to be socially acceptable or desirable). Extensive interviewer training is provided to minimise this effect and interviewers are also closely supervised, with a proportion of interviews verified by interviewer managers listening to recordings of telephone interviews or following up face-to-face interviews with telephone or in-person validation with the respondent.

The questionnaire was administered using a computerised script. This approach allows the interviewer to provide some additional explanation where it is clear that the reason for asking the question is not understood by that respondent. To help them do this, interviewers are provided with background information on some of the questions at the interviewer briefings that take place before fieldwork begins. The script also contains additional information where prompts or further explanations have been found to be needed. However, interviewers are made aware that it is vital to present questions and answer options exactly as set out in the CATI/CAPI script.

Some answers given are reflected in the wording of subsequent questions or checks (e.g. the names of children given are mentioned in questions on children’s schools). This helps the respondent (and interviewer) understand and answer the questions correctly.

A range of logic checks and interviewer prompts are included in the script to make sure the answers provided are consistent and realistic. Some of these checks are ‘hard checks’: that is, checks used in cases where the respondent’s answer is not consistent with other information previously given by the respondent. In these cases the question has to be asked again, and the response changed, in order to proceed with the interview. Other checks are ‘soft checks’, for responses that seem unlikely (either because of other information provided or because they are outside the usual range) but could be correct. In these cases the interviewer is prompted to confirm with the respondent that the response is indeed correct.
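The distinction between hard and soft checks can be sketched in Python as follows. The specific rules, thresholds, and function names are invented for illustration and are not taken from the survey's CATI/CAPI script.

```python
def check_answers(age, years_at_address, hours_worked_per_week):
    """Return a list of (kind, message) pairs, where kind is 'hard' or 'soft'."""
    issues = []
    # Hard check: inconsistent with information already given.
    if years_at_address > age:
        issues.append(("hard", "Years at address exceeds age: re-ask the question."))
    # Hard check: impossible value (there are 168 hours in a week).
    if hours_worked_per_week > 168:
        issues.append(("hard", "Hours worked exceeds hours in a week: re-ask."))
    # Soft check: unlikely but possible, so confirm with the respondent.
    elif hours_worked_per_week > 80:
        issues.append(("soft", "Unusually high hours worked: please confirm."))
    return issues


def can_proceed(issues, soft_confirmed=False):
    """Hard issues always block; soft issues block until confirmed."""
    if any(kind == "hard" for kind, _ in issues):
        return False
    if any(kind == "soft" for kind, _ in issues) and not soft_confirmed:
        return False
    return True


issues = check_answers(age=30, years_at_address=5, hours_worked_per_week=90)
print(issues, can_proceed(issues), can_proceed(issues, soft_confirmed=True))
```

A hard issue can only be cleared by changing the answer, whereas a soft issue is cleared once the interviewer confirms the response with the respondent.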

Similar checks are included in the self-completion section of the survey. Respondents are able to contact their interviewer or the survey enquiry line if they have any difficulties. Checks are in place to ensure the online section is completed by the same person who was selected to complete the telephone section.

Processing error: data validation

The main survey outputs are SPSS data files that were delivered after the end of the year’s fieldwork. Two main data files were provided by the survey contractor:

- A household dataset, containing details about each member of each household taking part in the survey: that is, responses to the enumeration grid and any information asked of the respondent about other members of the household; and

- A respondent dataset, containing each respondent’s answers.

Each dataset was checked by the survey contractor. A set of checks on the content and format of the datasets was then carried out by Welsh Government and amendments made by the contractor.

Timeliness and punctuality

Timeliness refers to the lapse of time between publication and the period to which the data refers. Punctuality refers to the time lag between the actual and planned dates of publication.

Results were released around five months after the end of the fieldwork period. This period has been kept as short as possible to ensure that results are as timely as possible while still being fully quality-assured.

More detailed topic-specific reporting will follow, guided by the needs of survey users.

Accessibility and clarity

Accessibility is the ease with which users are able to access the data, also reflecting the format(s) in which the data are available and the availability of supporting information. Clarity refers to the quality and sufficiency of the metadata, illustrations and accompanying advice.

Publications

All reports are available to download from the National Survey web pages. The National Survey web pages have been designed to be easy to read and to navigate.

Detailed charts and tables of results are available via an interactive results viewer. Because there are hundreds of variables in the survey and many thousands of possible analyses, only a subset is included in the results viewer. However, further tables and charts can be produced quickly on request.

For further information about the survey results, or if you would like to see a different breakdown of results, contact the National Survey team at surveys@gov.wales or on 0300 256 685.

Disclosure control

We take care to ensure that individuals are not identifiable from the published results. We follow the requirements for confidentiality and data access set out in the Code of Practice for Statistics.

Language requirements

We comply with the Welsh language standards for all our outputs. Our website, first releases, and Results viewer are published in both Welsh and English.

We aim to write clearly, using plain English / ‘Cymraeg Clir’.

UK Data Archive

Anonymised versions of the survey datasets (from which some information is removed to ensure confidentiality is preserved), together with supporting documentation, are deposited with the UK Data Archive in the autumn following the end of the fieldwork period. These datasets may be accessed by registered users for specific research projects.

From time to time, researchers may need to analyse more detailed data than is available through the Data Archive. Requests for such data should be made to the National Survey team (see contact details below). Requests are considered on a case by case basis, and procedures are in place to keep data secure and confidential.

Methods and definitions

We publish a Terms and definitions document explaining key terms.

An interactive question viewer and copies of the questionnaires are available on our web pages.

Comparability and coherence

The degree to which data can be compared over both time and domain.

Throughout National Survey statistical bulletins and releases, we highlight relevant comparators as well as information sources that are not directly comparable but provide useful context.

Comparisons with other countries

Wherever possible, survey questions are taken from surveys that run elsewhere. This allows for some comparisons across countries to be made (although differences in design and context may affect comparability).

Comparisons over time

Although the telephone-first survey covers many of the same topics as the face-to-face survey that ran pre-April 2020, in some cases using the same or only slightly adapted questions, care should be taken when making comparisons over time. The change of mode could affect the results in a variety of ways. For example, different types of people may be more likely to take part in the different modes; or the modes may affect how people answer questions [footnote 3]. Comparability is likely to be more problematic for less-factual questions (e.g. about people’s views on public services, as opposed to more-factual questions like whether they have used these services in a particular time period). However, for many topics results are similar across the changes in mode.

As noted earlier, differences between the 2024-25 results and previous years could represent real changes or could be due to changes in how the survey was carried out compared with previous years; or both. Work is underway to investigate any differences in results. While this work continues, we recommend care when making comparisons between 2024-25 results and those from previous years. Where possible, comparisons (particularly apparent changes over time) should be considered in the context of results from other data sources.

Footnotes

[1] Survey estimates themselves (as opposed to the standard errors and confidence intervals for those estimates) are not affected by the survey design.

[2] The value of 1.96 varies slightly according to the sample size for the estimate of interest.

[3] See 'Mixing modes within a social survey: opportunities and constraints for the National Survey for Wales', section 3.2.3 for a fuller review.

Contact details

Chris McGowan

Email: surveys@gov.wales

Media: 0300 025 8099